Systematic Evaluation: New Student's Technology Wizard

Executive Summary

The New Student's Technology Wizard is a web-based tool designed to simplify and clarify the technology setup process for students entering the University of Missouri. Modeled on electronic performance support systems (EPSS), the tool hosts the myZou and MU Student Email web applications alongside step-by-step instructions that update as the student proceeds. In keeping with the EPSS model, the Wizard includes help icons at key points where students may need additional support, providing just enough information exactly when it is needed. The Wizard requires students to proceed through setup without skipping any steps; however, they can go back a step as needed to correct mistakes.
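The navigation rule described above (no skipping forward, but stepping back is always allowed) can be pictured as a small state machine. The following is a minimal Python sketch under stated assumptions, not the Wizard's actual implementation: the class `SetupWizard`, its methods, and the placeholder step labels are hypothetical; only the step counts (five myZou steps, two email steps) come from this report.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SetupWizard:
    """Hypothetical model of the Wizard's linear, no-skip navigation."""

    steps: list[str]
    current: int = 0
    completed: set[int] = field(default_factory=set)

    def complete_current(self) -> None:
        # Called when the student finishes the instructions on this screen.
        self.completed.add(self.current)

    def forward(self) -> str:
        # Moving forward requires the current step to be finished,
        # so no step can be skipped.
        if self.current not in self.completed:
            raise ValueError(f"Finish '{self.steps[self.current]}' before continuing")
        if self.current < len(self.steps) - 1:
            self.current += 1
        return self.steps[self.current]

    def back(self) -> str:
        # Going back one step is always allowed so mistakes can be corrected.
        if self.current > 0:
            self.current -= 1
        return self.steps[self.current]


# Placeholder labels (hypothetical): five myZou steps, then two email steps.
STEPS = [f"myZou step {n}" for n in range(1, 6)] + [f"Email step {n}" for n in range(1, 3)]

wizard = SetupWizard(STEPS)
wizard.complete_current()
print(wizard.forward())  # myZou step 2
print(wizard.back())     # myZou step 1
```

Tracking a set of completed steps, rather than only a position counter, lets a student who steps back to fix a mistake move forward again without redoing steps already finished.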

Stakeholders include the UM System Chief Registrar, Brenda Selman, and her staff, as well as the IT staff traditionally responsible for walking students through the process of setting up their technology accounts during Summer Welcome. The Wizard will replace that Summer Welcome process by enabling students to fully set up their own accounts and begin using them as soon as they receive their admissions letter from the university.

Evaluation outcomes indicate that students find the wizard very easy to use and visually appealing, if a bit text-heavy. Our team project portfolio includes the Problem Analysis, Design Plan and Low Fidelity Prototype, High Fidelity Prototype, and Evaluation Report.

Description

Purpose

The primary goal of the evaluation is to assess how well the New Student's Technology Wizard addresses the information needs of incoming students. Accordingly, the evaluation has two parts: usability testing will assess the application's approachability, ease of use, efficiency, and appeal for incoming students, while an interview with Summer Welcome IT staff will identify stakeholder attitudes and concerns regarding the content, design, and utility of the application.

Specific goals of the evaluation include:

Determine how well this tool helps students complete the five required steps in myZou.

Determine how well this tool helps students complete the two required email steps that finish the account activation and setup process.

Determine whether the application will help incoming students navigate account setup during the admission process.

Context

Mizzou is a very technology-oriented campus. Incoming students (freshmen, transfers and distance learners) need to be informed about the primary technology tools they will rely upon during their career at MU: myZou and MU Student Email. Students need to be informed of what the tools do, why they need them, and how to get started using them.

Direct observation of students setting up their accounts during Summer Welcome reveals an information need. Currently, students receive a letter giving them their PawPrint and a temporary password, accompanied by a brief mention of myZou and no mention of student email. There are no instructions and no explanation of what to do with this information or why it is important. There is also no automated way to walk students through the process, which makes it very difficult for them to get started using technology at Mizzou. Students arrive at Summer Welcome at various stages of the setup process, often unaware that they still have steps to complete. An interview with Abbey Knaus, tech support team lead for Summer Welcome, reveals the extent of the problem (see Appendix A).

To address this situation, we propose a new software tool, the New Student's Technology Wizard. Upon acceptance to MU, each incoming student would receive a link to an online application that leads them through the initial steps to complete in myZou, and then through the steps to set up their MU Student Email.

Role

Writing/editing portfolio sections: started each section and contributed substantially to its content

Creating and updating interactions: created the interactive prototypes in Fireworks and uploaded them

Researching: provided theoretical background and resources, including sources on the EPSS model and the CAMs system

Analyzing: synthesized evaluation data and compiled the change log and graphs

Systematic Evaluation

Evaluation Method 1: User Observation

Rationale: Usability testing with members of the target audience is the best way to assess the application's approachability, ease of use, efficiency and appeal for incoming students. Observing the users and asking them to think aloud as they interact with the application will help identify any discrepancies between the proposed design and actual users' mental models.

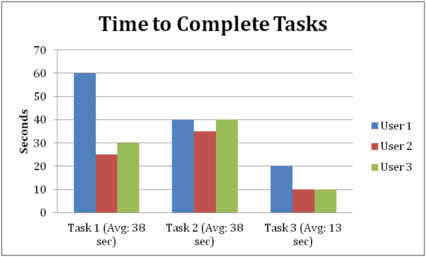

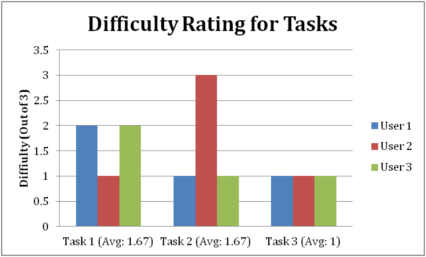

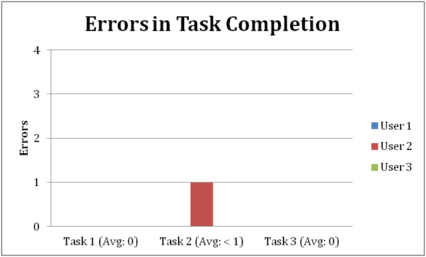

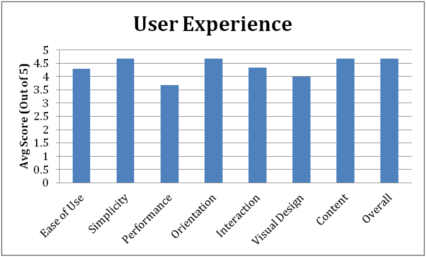

Data Analysis: The Observation Form will record the time taken to complete each task, the difficulty of completing it (rated 1-3), and the errors made, along with a notes section for recording testers' statements as they think aloud. The Usability Form, completed by testers, will be a short survey asking them to rate how strongly they agree or disagree with a list of statements about the application. Results from these two instruments will be summarized in four graphs: Time to Complete Tasks, Difficulty Rating for Tasks, Errors in Task Completion, and User Experience.
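The per-task summaries behind the four graphs amount to simple averages and totals. Below is a hypothetical Python sketch of that aggregation, not the team's actual procedure: the `TaskObservation` record, the function names, and the 1-5 agreement scale for the Usability Form are assumptions for illustration.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskObservation:
    """One row of the Observation Form (field names are hypothetical)."""

    tester: str
    task: str
    seconds: float   # time spent to complete the task
    difficulty: int  # rated 1 (easy) to 3 (hard)
    errors: int      # errors made during the task


def summarize_tasks(rows: list[TaskObservation]) -> dict[str, dict[str, float]]:
    """Per-task values for the Time, Difficulty, and Errors graphs."""
    by_task: dict[str, list[TaskObservation]] = defaultdict(list)
    for row in rows:
        if not 1 <= row.difficulty <= 3:
            raise ValueError(f"difficulty must be 1-3, got {row.difficulty}")
        by_task[row.task].append(row)
    return {
        task: {
            "mean_seconds": mean(r.seconds for r in obs),
            "mean_difficulty": mean(r.difficulty for r in obs),
            "total_errors": sum(r.errors for r in obs),
        }
        for task, obs in by_task.items()
    }


def summarize_survey(responses: list[dict[str, int]]) -> dict[str, float]:
    """Mean agreement per Usability Form statement for the User Experience graph.

    Assumes numeric ratings (for example, a 1-5 agree/disagree scale);
    ratings are averaged as given.
    """
    scores: dict[str, list[int]] = defaultdict(list)
    for response in responses:
        for statement, rating in response.items():
            scores[statement].append(rating)
    return {statement: mean(values) for statement, values in scores.items()}
```

Each returned dictionary maps directly onto one bar per task or per survey statement in the corresponding graph.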

Evaluation Method 2: IT Staff Interview

Rationale: Returning to stakeholders and subject matter experts (our IT staff) as part of the evaluation is crucial for determining whether the New Student's Technology Wizard fully meets the current information need or needs further changes before it can serve as an account setup tool for students. Listening to stakeholder attitudes and concerns regarding the content, design, and utility of the application will both increase buy-in and help us improve it.

Data Analysis: The interview will directly address the evaluation questions. Because only one interview is planned at this stage, data analysis needs are limited. The results will be compared with the usability test results and applied directly to improving the prototype. Further interviews with Summer Welcome and other IT staff will be conducted at later stages of development, when the Wizard's interaction with the actual myZou and student email sites can be tested.

Evaluation Results

Results from the user observation and usability testing form are shown below:

Over the course of peer review and evaluation, our interface and interaction design changed to include more detailed and extensive support, such as tooltips, bringing the Wizard closer to the Electronic Performance Support System model.

Based on peer review of the low fidelity prototype, we added Tech Support contact information to the footer of each screen and changed the color scheme and navigation structure.

Rationale: Improves performance support, visual design, and navigation

-

Based on peer review of the high fidelity prototype, we incorporated rollover tooltips and added a back button at the bottom of all step-by-step instructions.

Rationale: Improves performance support, content, and navigation

-

Based on user testing, we added an explanation to the intro screen stating that myZou and email are separate systems but that the Wizard will set up both. We also added buttons to the final screen that take students directly to the myZou and email sites.

Rationale: Improves content and performance support

-

Based on the evaluation interview as well as feedback from user testing, we added a tooltip to explicitly state what FERPA is, provided a skip button for AAA, and added a tooltip explaining the consequences of skipping this step.

Rationale: Improves performance support, interaction, and content

User testing proved to be the most valuable evaluation method, as it gave us feedback from the target audience that we could use to adapt the prototype. Observing real users made it much easier to identify which tooltips, buttons, and interactions were needed. The evaluation interview confirmed those results and provided guidance on the changes that would best address the identified needs.

Reflection

Through usability evaluation, we learned that the target audience, while largely appreciative of the Wizard's design and functionality, also wanted more interactivity than we had initially provided. Their primary requests were more buttons and more specific explanations that they could access if desired. These requests aligned closely with generally accepted design standards for performance support: the design should give users as much control as possible, including control over how much guidance they receive.

With more time to apply lessons from the evaluation, the project could be improved by adding further "levels" of performance support, for instance by hiding the introductory text until users choose to read it by clicking on a question. However, hiding such essential text would require great care and extensive user testing, since it could just as easily confuse or frustrate users who need the information but do not realize how to access it. In its current form, the Wizard supports all of the required setup tasks and gives users one level of control over information through rollover tooltips.
